Foundation Models: The Cornerstones of Modern AI

Overview

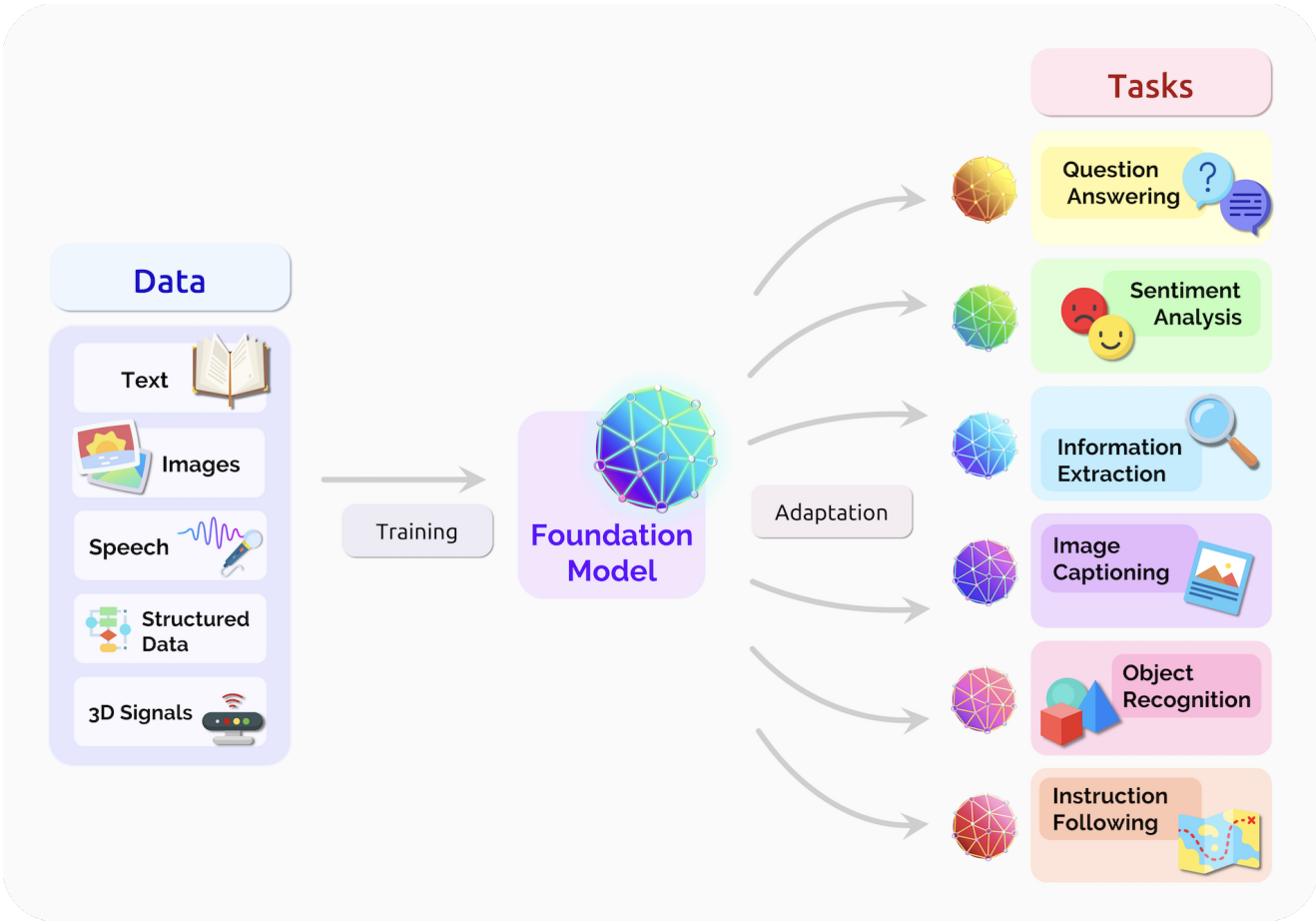

Foundation models (FMs) are deep learning models trained on massive, raw, unlabelled datasets, usually through self-supervised learning. Today's data scientists can use an FM as a base and fine-tune it on domain-specific data to obtain models that handle a wide range of tasks [1, 6, 7]. In this talk, we introduce FMs, trace their history and evolution, and go through their key features, categories, and a few examples. We also briefly discuss how foundation models work. This talk is a precursor to the hands-on session on the same topic that follows.

Image source: 2021 paper on foundation models by Stanford researchers [1].


In this session, we take a closer look at what constitutes a foundation model, walk through a few examples, and cover some basic principles of how foundation models work.
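The self-supervised pre-training mentioned in the overview can be made concrete with a small sketch: the training targets are manufactured from the raw data itself rather than from human labels. The Python below builds masked-token prediction examples in the spirit of BERT [3]. It assumes simple whitespace tokenization, and the helper `make_masked_examples` is hypothetical. It is also a simplification of BERT's recipe: BERT works on subword tokens and, of the tokens it selects, replaces most with a mask token, swaps some for random tokens, and leaves the rest unchanged. Here every selected token is simply masked.

```python
import random

MASK = "[MASK]"  # placeholder token; the exact symbol is an assumption


def make_masked_examples(tokens, mask_prob=0.15, seed=0):
    """Turn an unlabelled token sequence into (inputs, targets) for masked-token prediction.

    The targets come from the text itself, so no human labelling is needed.
    Simplified sketch: every selected token is replaced by MASK (no random/keep variants).
    """
    rng = random.Random(seed)  # seeded so the masking is reproducible
    masked = list(tokens)      # copy so the original sequence is not modified
    targets = {}               # position -> original token the model must predict
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked[i] = MASK
            targets[i] = tok
    # Guarantee at least one prediction target for any non-empty sequence.
    if not targets and tokens:
        i = rng.randrange(len(tokens))
        masked[i] = MASK
        targets[i] = tokens[i]
    return masked, targets


if __name__ == "__main__":
    # Whitespace tokenization is an assumption; real FMs use subword tokenizers.
    sentence = "foundation models learn general patterns from raw text".split()
    inputs, labels = make_masked_examples(sentence, mask_prob=0.3, seed=42)
    print(" ".join(inputs))
    print(labels)
```

A model pre-trained on many such pairs learns to predict hidden words from their context. Fine-tuning then reuses those learned weights on a smaller, labelled, domain-specific dataset.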

Outline

- Introduction to foundation models: their history and evolution

- Key features of foundation models

- Types of foundation models: Language, Vision, Generative, and Multimodal

- Examples of foundation models: BERT [3], GPT [4], YOLO [2], SAM [5], DALL-E 2

- How do foundation models work?