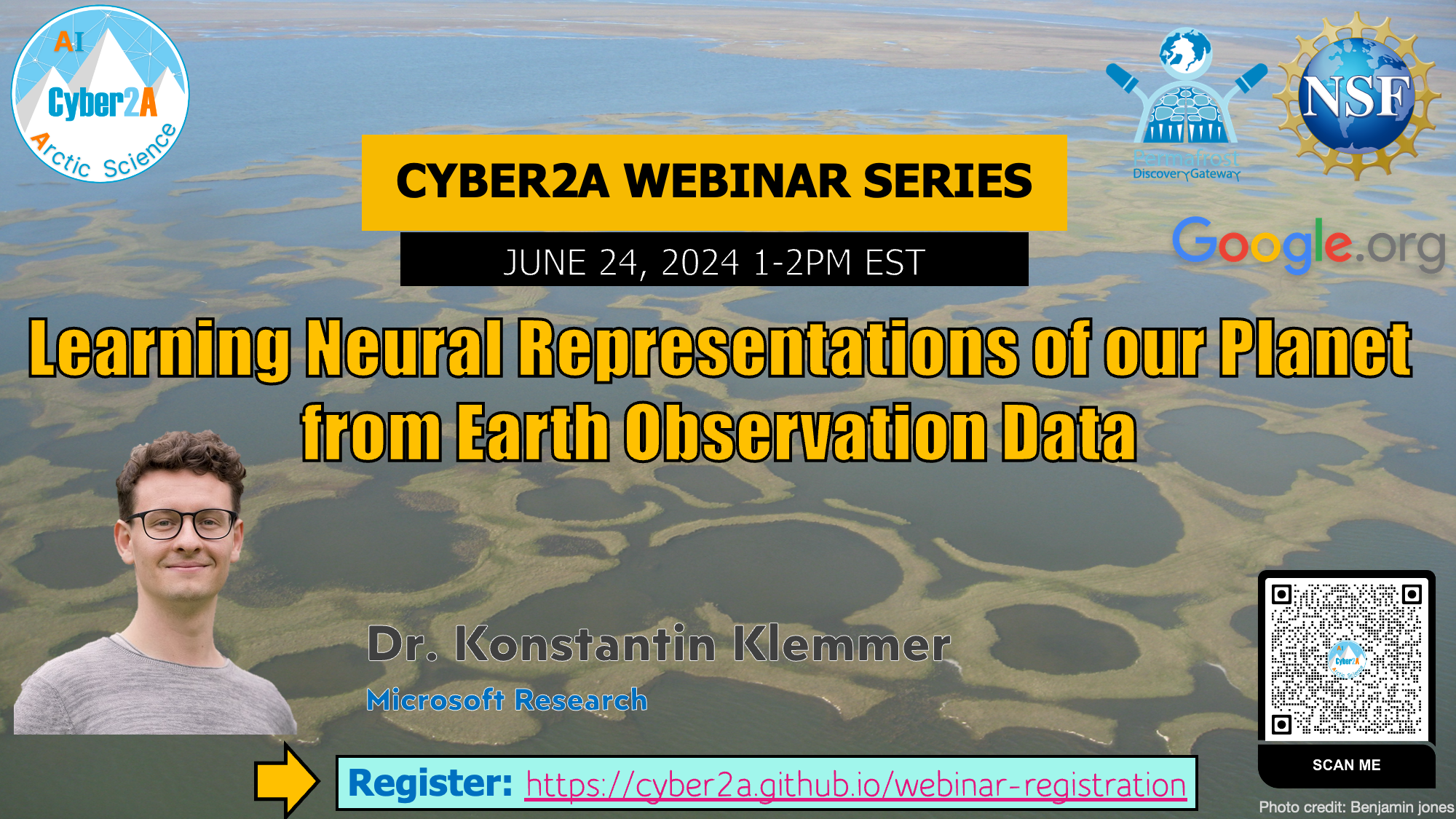

Learning Neural Representations of our Planet from Earth Observation Data

Abstract

Earth observation data captures the natural and built environment of our planet. It comes in different forms, from satellite images to in-situ measurements, but can be mapped into the same geometric space: the sphere of planet Earth. Such data can be crucial in supporting decision-making in the private and public sectors alike, from improving flood resilience to forecasting crop yields. But making sense of the vast amounts of Earth observation data is difficult, as it is often unstructured, unlabeled and multi-modal. Neural networks have emerged as a powerful tool for processing such large quantities of unstructured data but are not equipped to handle the spatial and temporal dependencies and spherical geometry of Earth observation data. In this talk, I will present a new class of neural network models purpose-built for Earth observation data: geographic location encoders. These models combine the scalability of neural networks with geospatial domain knowledge and traditional intuitions from geodesy and spatial statistics. I will highlight how location encoders can be used for fast and accurate predictive modeling on the sphere, with applications in climate modeling and species distribution modeling. I will then present means of training location encoders in the absence of labels, using globally distributed satellite imagery and contrastive self-supervised learning. The resulting pretrained location encoders produce general-purpose location embeddings that learn the natural and physical characteristics of locations around the world. These embeddings are powerful features in downstream modeling and can help tackle long-standing challenges in geospatial machine learning such as geographic distribution shift. Finally, I will outline future challenges in AI for Earth and present an ambitious goal: “digitally twinning” our planet with the support of large-scale, self-supervised learning and Earth observation data.

Date and Time

June 24, 2024, 1:00 - 2:00 PM ET

Speaker Biography

Konstantin Klemmer is a postdoctoral researcher at Microsoft Research New England and part of the Machine Learning and Statistics group. His research focuses on the representation of geographic phenomena in machine learning methods, particularly in neural networks. Konstantin’s recent work includes the integration of notions of spatial dependency into neural networks and the unsupervised training of location encoders that learn characteristics of a given location and can be deployed in various downstream tasks. His work is motivated by real-world challenges such as climate change and increasing urbanization, combining technical and methodological research with application and deployment studies. Konstantin has a PhD in Computer Science and Urban Science from the University of Warwick and spent time as a visiting student at NYU, as an Enrichment student at the Alan Turing Institute and as a Beyond Fellow at TUM and DLR. He also holds a Master’s in Transportation from Imperial College London and University College London. Website: https://konstantinklemmer.github.io